The Gist

Whether for personal gain or to better humanity, the goal of many security researchers is to find bugs. The current state of the art tells us that fuzzing is one of the best methods to do this. AFL is a fuzzer that has proven to be particularly useful because it approaches fuzzing with a unique twist: it mutates program inputs with a genetic algorithm that uses execution traces from previous iterations to maximize code coverage over the life of the fuzzing session.
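To make "execution traces" more concrete: AFL's technical documentation describes instrumentation that, at every basic block, increments a counter in a 64 KiB shared bitmap at index cur_location ^ prev_location and then sets prev_location = cur_location >> 1. The sketch below models that idea in plain C, along with a simplified "did this run reach any new edges?" check of the kind a coverage-guided fuzzer uses to decide whether an input is worth keeping. It is a hedged illustration, not AFL's code. The function names are hypothetical, block IDs are passed in by hand instead of being assigned at compile time, and AFL's hit-count bucketing is omitted.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define MAP_SIZE 65536  /* AFL's default bitmap size (64 KiB) */

static uint8_t  trace_bits[MAP_SIZE];   /* hit counts for the current run */
static uint8_t  virgin_bits[MAP_SIZE];  /* 1 = edge never seen in any run */
static uint16_t prev_location;

/* Called at each instrumented basic block (hypothetical name). In AFL,
 * cur_location is a random ID chosen at compile time. Records the edge
 * prev -> cur by bumping its counter in the bitmap. */
void log_block(uint16_t cur_location)
{
    trace_bits[(uint16_t) (cur_location ^ prev_location)]++;
    /* Shift so that A->B and B->A map to different slots. */
    prev_location = cur_location >> 1;
}

/* Clear per-run state before executing the next input. */
void reset_trace(void)
{
    memset(trace_bits, 0, sizeof trace_bits);
    prev_location = 0;
}

/* Mark every edge as unseen at the start of the session. */
void init_virgin(void)
{
    memset(virgin_bits, 1, sizeof virgin_bits);
}

/* Returns how many edges this run hit that no earlier run hit, and marks
 * them as seen. A fuzzer would keep the input if this is non-zero. */
int count_new_edges(void)
{
    int fresh = 0;

    for (size_t i = 0; i < MAP_SIZE; i++) {
        if (trace_bits[i] && virgin_bits[i]) {
            virgin_bits[i] = 0;
            fresh++;
        }
    }
    return fresh;
}
```

In this model, a fuzzer would call reset_trace() before each run, let the instrumented program call log_block(), and add the input to its queue whenever count_new_edges() returns a non-zero value.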

Another appealing characteristic of AFL is that it requires very little knowledge about the target to begin fuzzing. Other popular fuzzers like Peach or scuzzy require intricate knowledge of the target protocol or file format to fuzz their targets effectively. AFL’s genetic algorithm and compile-time instrumentation mean that open-source software can be fuzzed, with just a few sample inputs, as rigorously as (or more rigorously than) with a format-aware fuzzer. However, one major downside of AFL is that it is really only designed to work with programs that accept input through stdin or a file.

This blog post discusses extending AFL to fuzz programs with network-based inputs, using the nginx 1.11.1 web server as an example. We will cover the use of preeny to redirect socket input to stdin, modifications to nginx that make it exit promptly after each request and let it use AFL’s forkserver, and some simple yet effective nginx patches to speed up the fuzzing process.

The Goal

Web servers, such as nginx, are the backbone of the modern internet, so particular attention must be paid to their security. AFL arms us with another tool to dissect and examine these critical pieces of infrastructure. The challenge with using AFL on these servers, however, is that they only accept input over network sockets, and AFL doesn’t support socket-based input. There have been several successful attempts to patch networked programs so they can be fuzzed with AFL, but they suffer from either high complexity or poor performance.[1][2] We will present advancements to these existing techniques that address both ease of use and performance. Our approach focused on finding an efficient way to supply the input for a single HTTP request.

The Method

The first step is to modify nginx to exit after servicing exactly one HTTP request. This creates a fresh instance of nginx for each fuzz iteration and ensures that nginx exits properly and promptly on every input. Another benefit is that most of the code exercised in each iteration is strongly correlated with the input value, so the bugs we find are more likely to be caused by our input than by state left over from earlier requests. Choosing the exit point carefully matters, though: terminating the process too early could cause AFL to miss potentially exploitable bugs.

After scouring the code, we determined that the appropriate place to force nginx to exit is in the ngx_single_process_cycle function of os/unix/ngx_process_cycle.c. Line 309 of this file contains the call to ngx_process_events_and_timers(), which is responsible for processing queued events.

```c
for ( ;; ) {
    ngx_log_debug0(NGX_LOG_DEBUG_EVENT, cycle->log, 0, "worker cycle");

    ngx_process_events_and_timers(cycle);  /* line 309 */

    if (ngx_terminate || ngx_quit) {

        for (i = 0; cycle->modules[i]; i++) {
            if (cycle->modules[i]->exit_process) {
                cycle->modules[i]->exit_process(cycle);
            }
        }

        ngx_master_process_exit(cycle);
    }

    if (ngx_reconfigure) {
        ngx_reconfigure = 0;
        ngx_log_error(NGX_LOG_NOTICE, cycle->log, 0, "reconfiguring");

        cycle = ngx_init_cycle(cycle);
        if (cycle == NULL) {
            cycle = (ngx_cycle_t *) ngx_cycle;
            continue;
        }

        ngx_cycle = cycle;
    }

    if (ngx_reopen) {
        ngx_reopen = 0;
        ngx_log_error(NGX_LOG_NOTICE, cycle->log, 0, "reopening logs");
        ngx_reopen_files(cycle, (ngx_uid_t) -1);
    }

    /* New: exit(0) to force the server to close after one execution */
    exit(0);
}
```

When nginx accepts a connection, it processes it as an event through the call (void) ngx_process_events inside ngx_process_events_and_timers(). ngx_process_events is not an ordinary function but a function pointer that refers to the implementation of whichever connection-processing method (select, epoll, and so on) nginx is using. For this exercise, we use the select module to handle incoming connections. We place our call to exit(0) outside this function to keep our nginx modifications as generic as possible, so that we can fuzz whatever nginx modules we want. Looking back inside the for loop, we want to find a suitable place to “cleanly” exit the program. Since we only want to process one connection, and therefore one event, we place the call to exit(0) at the end of the for loop.
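To illustrate why exiting in the loop that calls through ngx_process_events stays generic, here is a hypothetical, heavily simplified model of function-pointer dispatch. As far as we can tell, nginx defines ngx_process_events as a macro over ngx_event_actions.process_events, which the active event module fills in. The struct, the stub functions, and use_event_method() below are illustrative names only, not nginx’s API.

```c
#include <stddef.h>
#include <string.h>

/* Hypothetical signature; the stubs return an ID so callers can tell
 * which implementation actually ran. */
typedef int (*process_events_fn)(int timer_ms);

typedef struct {
    const char        *name;
    process_events_fn  process_events;
} event_actions_t;

static int select_process_events(int timer_ms) { (void) timer_ms; return 1; }
static int epoll_process_events(int timer_ms)  { (void) timer_ms; return 2; }

/* Table of available methods, loosely analogous to nginx's event modules. */
static const event_actions_t methods[] = {
    { "select", select_process_events },
    { "epoll",  epoll_process_events  },
};

static event_actions_t event_actions;  /* the currently active method */

/* Select a method by name; returns 0 on success, -1 if unknown
 * (the previously active method is left unchanged). */
int use_event_method(const char *name)
{
    for (size_t i = 0; i < sizeof methods / sizeof methods[0]; i++) {
        if (strcmp(methods[i].name, name) == 0) {
            event_actions = methods[i];
            return 0;
        }
    }
    return -1;
}

/* Callers always write process_events(...), whatever method is active. */
#define process_events(timer) event_actions.process_events(timer)
```

Because the caller only ever goes through the pointer, an exit(0) placed in the calling loop behaves the same whether select or epoll is in use.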

However, when we run our modified nginx, the server accepts our request but exits before it can process it and return the web page to us. As it turns out, nginx treats both receiving and serving a request as events. A simple fix is to add a small counter to the for loop so that it executes two full iterations before exiting.
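A minimal sketch of the two-iteration counter, written as a standalone model so that it compiles on its own. Here process_events_and_timers() is a hypothetical stub that just counts calls, FUZZ_MAX_ITERATIONS is an assumed name, and the function returns at the point where the real patch in ngx_single_process_cycle() would call exit(0).

```c
/* Hypothetical stub standing in for ngx_process_events_and_timers():
 * each call handles one event. */
static int events_handled = 0;

static void process_events_and_timers(void)
{
    events_handled++;
}

/* Assumed name: one iteration to read the request, one to send the reply. */
#define FUZZ_MAX_ITERATIONS 2

/* Model of the patched for (;;) loop. Returns the number of iterations
 * run; in the actual nginx patch, the return would be exit(0). */
static int single_process_cycle(void)
{
    int iterations = 0;

    for ( ;; ) {
        process_events_and_timers();

        /* ... ngx_terminate / ngx_reconfigure / ngx_reopen checks ... */

        if (++iterations >= FUZZ_MAX_ITERATIONS) {
            return iterations;  /* nginx patch: exit(0); */
        }
    }
}
```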


Pro Tip: For those of you who may not have worked with servers before, a good place to start looking for places to modify code is around infinite loops and calls to select() or accept().

Now that nginx successfully exits after serving one request, we can investigate getting AFL to feed inputs to nginx. One option would be to patch nginx to force its call to accept() to be handled through stdin. However, this process requires a very thorough understanding of the target, which increases the time it takes to write a test harness. But luckily for us, there’s an awesome toolset we can use to bypass this painful process!

Preeny is a very useful collection of shared objects designed to be preloaded into a target program’s address space. They provide a wide array of functionality, but we are particularly interested in the desock tool. With desock, we don’t have to intercept the sockets manually, because the shared object does all the heavy lifting of hooking networking functions and injecting input from stdin. Another benefit of desock.so is that it requires no changes to the target’s code, so it can be used quite generically on any server that accepts socket-based input. If you want to learn more about how it works, I highly suggest looking at the project’s GitHub repository!
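For intuition about what a desock-style shim does, the sketch below shows one way to produce a file descriptor that behaves like an accepted connection whose client has already sent its request: a UNIX-domain socketpair. This is a simplified illustration of the general idea, not preeny’s actual implementation (as we understand it, preeny relays data between stdin/stdout and the socket with helper threads). fake_connection() is a hypothetical helper, and it assumes the input fits in the socket buffer, because nothing reads the other end while it writes.

```c
#define _POSIX_C_SOURCE 200809L
#include <stddef.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: returns a socket that reads back `data` followed by
 * EOF, as if a client had sent it and then finished sending. The other end
 * is stored in *peer_out so a shim could relay the server's reply to stdout.
 * Assumes len fits in the socket buffer (no reader runs while we write). */
int fake_connection(const char *data, size_t len, int *peer_out)
{
    int sv[2];

    if (socketpair(AF_UNIX, SOCK_STREAM, 0, sv) < 0) {
        return -1;
    }

    size_t off = 0;
    while (off < len) {
        ssize_t n = write(sv[1], data + off, len - off);
        if (n <= 0) {
            close(sv[0]);
            close(sv[1]);
            return -1;
        }
        off += (size_t) n;
    }

    /* Half-close: the server sees EOF after the request but can still reply. */
    shutdown(sv[1], SHUT_WR);

    *peer_out = sv[1];
    return sv[0];  /* what a hooked accept() could hand back to the server */
}
```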

Once we have downloaded and compiled our desock.so, we will test it by preloading it when executing our target.

```
$ LD_PRELOAD=<path to desock>/desock.so ./<path to nginx>/nginx
GET /
<!DOCTYPE html>
<html>
<head>
<title>Welcome to nginx!</title>
<style>
body {
width: 35em;
margin: 0 auto;
font-family: Tahoma, Verdana, Arial, sans-serif;
}
</style>
</head>
<body>
<h1>Welcome to nginx!</h1>
<p>If you see this page, the nginx web server is successfully installed and
working. Further configuration is required.</p>
<p>For online documentation and support please refer to
<a href="http://nginx.org/">nginx.org</a>.<br/>
Commercial support is available at
<a href="http://nginx.com/">nginx.com</a>.</p>
<p><em>Thank you for using nginx.</em></p>
</body>
</html>
```

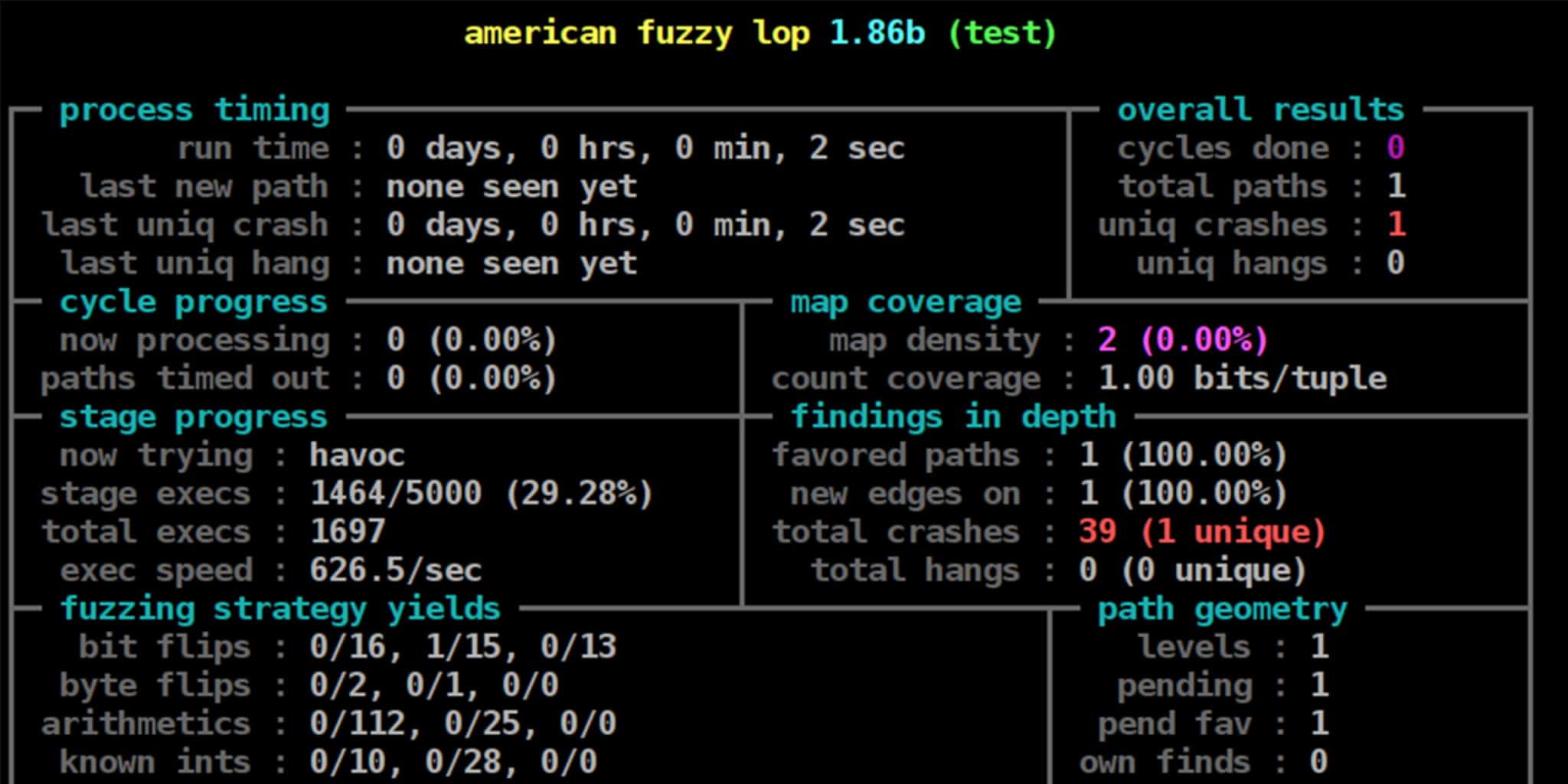

Success! However, fuzzing ran at only a measly ~50-70 exec/s on an Ubuntu 16.04 VM running on a MacBook Pro. At this speed, surely we’d be better off using some other fuzzer! Our challenge now is to raise this pathetically low number.

First and foremost, the compiler used to instrument the binary is very important. Our initial tests used afl-gcc, since the nginx documentation instructed us to compile with gcc. However, after instrumenting nginx with AFL’s experimental afl-clang-fast compiler instead, the execution rate increased only marginally, to ~80-90 exec/s. Still not good enough!

The next step in speeding up our iterations required tinkering with the source code again, but with very little effort this time. Ideally, AFL’s persistent mode would be a good way to improve performance. Unfortunately, nginx has far too many moving parts (e.g., signals and file descriptors) to make efficient use of persistent mode. However, there is still a small gem in the AFL toolbox we can use: the deferred forkserver! This little macro, __AFL_INIT(), which is available when compiling with afl-clang-fast, lets us manually specify where the AFL forkserver entry point goes. Unlike persistent mode, it does not require nginx to reset its own state between inputs, because every input still runs in a freshly forked process, so it sidesteps the problems above. We can simply move the forkserver entry point later, after the early initialization has already run once, instead of repeating all of nginx’s startup work on every execution.
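Why deferring the forkserver helps can be shown with a toy model: do the expensive setup once, then fork() a fresh child for each input, so every child starts from the already-initialized state without repeating it. This is only a sketch of the idea under stated assumptions. AFL’s real forkserver is driven by afl-fuzz over pipes, and expensive_init(), handle_input(), and forkserver_loop() are hypothetical names.

```c
#define _POSIX_C_SOURCE 200809L
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Counts how often the expensive setup runs (in the parent process). */
static int init_calls = 0;

/* Hypothetical stand-in for nginx's startup work before the fork point. */
static void expensive_init(void)
{
    init_calls++;
}

/* Hypothetical per-input work: exit status 0 for even inputs, 1 for odd. */
static int handle_input(int input)
{
    return input % 2;
}

/* Runs setup once, then one forked child per input. Returns how many
 * children exited with status 0, or -1 on error. */
int forkserver_loop(const int *inputs, int n)
{
    expensive_init();  /* paid once, not once per input */

    int ok = 0;
    for (int i = 0; i < n; i++) {
        pid_t pid = fork();
        if (pid < 0) {
            return -1;
        }
        if (pid == 0) {
            /* Child: inherits the initialized state, handles one input. */
            _exit(handle_input(inputs[i]));
        }

        int status;
        if (waitpid(pid, &status, 0) < 0) {
            return -1;
        }
        if (WIFEXITED(status) && WEXITSTATUS(status) == 0) {
            ok++;
        }
    }
    return ok;
}
```

Without deferral, the equivalent of expensive_init() would run inside every child, since the default forkserver starts before main(); moving __AFL_INIT() later is what lets that work happen only once.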

Finding the best place for the entry point took a mix of reading source code and a little guess-and-check. Since the forkserver’s entry point defaults to the start of main, we can usually assume that the new entry point belongs somewhere in main. In the end, I placed it right before the call to ngx_init_cycle() in core/nginx.c.

```c
if (ngx_preinit_modules() != NGX_OK) {
    return 1;
}

#ifdef AFL_SHIM
    __AFL_INIT();
#endif

cycle = ngx_init_cycle(&init_cycle);
```
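The listing guards __AFL_INIT() with AFL_SHIM, which we assume is a project-specific macro passed on the compiler command line (for example, -DAFL_SHIM). AFL’s llvm_mode documentation instead suggests guarding with __AFL_HAVE_MANUAL_CONTROL, which afl-clang-fast defines automatically, so the same source still builds with a regular compiler. A minimal sketch; afl_deferred_init() is a hypothetical wrapper name:

```c
/* Hypothetical wrapper around AFL's deferred forkserver entry point.
 * __AFL_HAVE_MANUAL_CONTROL is defined by afl-clang-fast; with any other
 * compiler the hook is compiled out. Returns 1 if the hook was compiled in. */
int afl_deferred_init(void)
{
#ifdef __AFL_HAVE_MANUAL_CONTROL
    __AFL_INIT();
    return 1;
#else
    return 0;
#endif
}
```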

After this small modification, we got a whopping boost to ~150-170 exec/s! Even though that is not in AFL’s typical range of thousands of exec/s, this roughly threefold increase over our original afl-gcc build will certainly help in the hunt for bugs!