We run many social media advertising campaigns at Two Six to solicit information and survey responses. How we present our ads affects the level and types of responses we get. There are several commercial tools for analyzing the results of online ad campaigns. However, the questions you ask of the data matter more than the tools you use. In this post, we present results from two ad campaigns in West Africa and walk through the thought process of defining and determining the “best” ad.

Figure 1 (example ads): Fence, Harvest, Mud

| Ad (A/B test group) | Cost | Impressions | Reach | Facebook Clicks | Page hits | Consents | Completions |
|---|---|---|---|---|---|---|---|
| Food (1) | 224.57 | 205929 | 77614 | 1573 | 1698 | 86 | 52 |
| Fuel (2) | 224.58 | 169041 | 89753 | 2078 | 1832 | 162 | 113 |
| Store (1) | 224.57 | 206076 | 67495 | 1408 | 1351 | 133 | 83 |
| Fence (2) | 224.57 | 219230 | 98629 | 2111 | 2421 | 286 | 200 |
| Vendor (1) | 224.57 | 202235 | 74050 | 1547 | 1692 | 135 | 99 |
| Harvest (1) | 224.57 | 184251 | 72473 | 2039 | 1934 | 190 | 144 |
| Market (2) | 224.57 | 165922 | 87570 | 2329 | 1908 | 158 | 102 |
| Mud (1) | 224.58 | 171789 | 81173 | 3140 | 2892 | 140 | 93 |

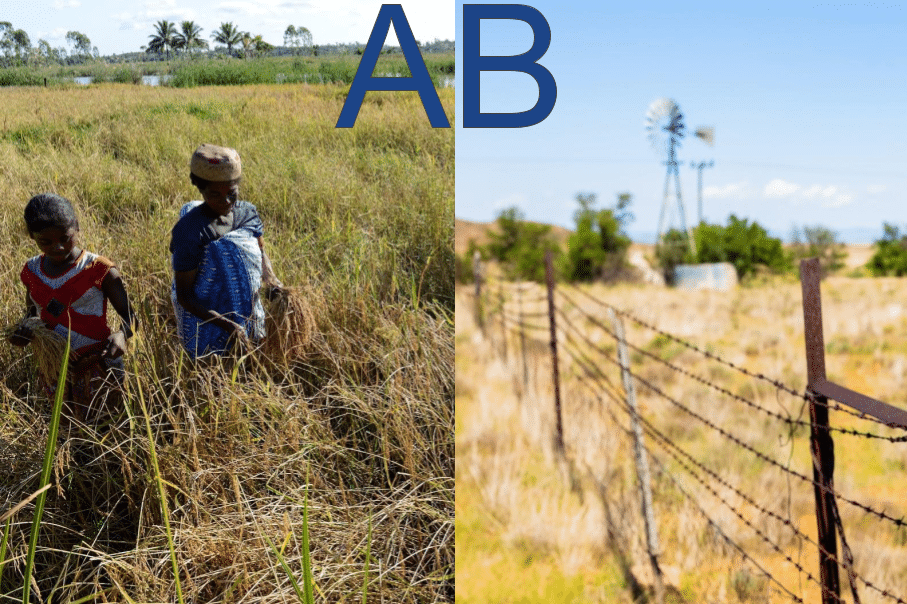

In August, we ran a survey in Zamfara, Nigeria, asking about the current prices of rice, vegetables, and beef, where respondents thought those prices would be six weeks from now, and why. We ran eight different ads on Facebook, intending to highlight different localized facets of food production and delivery to marketplaces, as well as to represent differences in polarity (positive vs. negative, convenient vs. inconvenient, etc.). We show three examples in Figure 1. In Table 1, we list the cost, impressions (the number of times Meta showed the ad), reach, and clicks reported by Meta, along with the page hits (the number of times a survey was opened), consents, and completions from the surveys.

We opted for cost per (link) click, meaning we were charged only when a user clicked on the link in our ad. However, Facebook states that its “ad auction system selects the best ads to run based on the ads’ maximum bids and ad performance” (Meta Business Help Center). So our ads, even though they cost the same, received different numbers of clicks and may have been shown to audiences of different sizes. Meta also includes an A/B testing framework in which an advertiser puts ads in an A/B test group and Meta shows those ads to different populations. In other words, a person will not see two ads in the same A/B test group. At the time of writing, an A/B test group could contain at most five ads, so we split the eight ads into two A/B test groups.

Meta lets you choose a metric for ad budget optimization, the most common one being cost-per-click. Since all of our ads had essentially the same cost, this boils down to which ad had the most clicks. Following this metric, Meta would tell you that our “Mud” ad is the best because it has the lowest cost per click. However, looking at the survey data, the ad turns only 3% of its clicks into survey completions. Only 4.5% of people who click on the “Mud” ad even consent to taking the survey! While this ad grabs users’ clicks, it does not inspire many to finish the survey.
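The gap between clicks and completions can be checked directly from Table 1. The following is a minimal sketch: the dictionary layout and the `funnel_rates` helper are illustrative names, not part of any Meta tooling, and both rates are taken relative to Facebook clicks.

```python
# Rows from Table 1 (Zamfara): ad -> (facebook_clicks, consents, completions)
zamfara = {
    "Food":    (1573,  86,  52),
    "Fuel":    (2078, 162, 113),
    "Store":   (1408, 133,  83),
    "Fence":   (2111, 286, 200),
    "Vendor":  (1547, 135,  99),
    "Harvest": (2039, 190, 144),
    "Market":  (2329, 158, 102),
    "Mud":     (3140, 140,  93),
}


def funnel_rates(clicks, consents, completions):
    """Return (consent rate, completion rate), both as fractions of ad clicks."""
    return consents / clicks, completions / clicks


rates = {ad: funnel_rates(*row) for ad, row in zamfara.items()}

# With near-equal cost, a cost-per-click view rewards the ad with the most clicks.
most_clicked = max(zamfara, key=lambda ad: zamfara[ad][0])
# A survey-centric view rewards the share of clicks that end in a completion.
best_completion_rate = max(rates, key=lambda ad: rates[ad][1])
worst_completion_rate = min(rates, key=lambda ad: rates[ad][1])

for ad, (consent_rate, completion_rate) in rates.items():
    print(f"{ad:8s} consent/click = {consent_rate:5.1%}  "
          f"completion/click = {completion_rate:5.1%}")
print("Most clicks:", most_clicked)
print("Highest completion-to-click rate:", best_completion_rate)
print("Lowest completion-to-click rate:", worst_completion_rate)
```

Under these assumptions, the same ad can sit at the top of one ranking and at the bottom of the other, which is why the choice of metric matters.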

So instead, we look at the ad with the highest number of completions. Along this dimension, the “Fence” ad seems to be the clear winner. However, we argue that the “Harvest” ad, if not the best, is at least comparable to “Fence.” First, “Fence” has a 36% larger reach than “Harvest.” Second, for “Fence,” the number of page hits is higher than the number of Facebook clicks. This indicates that at least 300 visits to the survey connected to “Fence” arrived by some means other than a click on the ad as Facebook served it. Most often, this means someone shared the ad with their friends. People who come to the survey through shared posts are more likely to complete the survey than people who click the Facebook ad. Both factors are independent of the quality of the ad and could explain the extra completions that “Fence” has over “Harvest.” In this case, the top ad is either “Fence” or “Harvest,” but further tests would be needed to see which one is better.
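The two confounding factors can be quantified from Table 1. This is a small sketch: the helper names are hypothetical, and normalizing completions by reach is one illustrative way to adjust for audience size, not a metric reported by Meta.

```python
# Figures from Table 1 for the two leading Zamfara ads.
ads = {
    "Fence":   {"reach": 98629, "clicks": 2111, "page_hits": 2421, "completions": 200},
    "Harvest": {"reach": 72473, "clicks": 2039, "page_hits": 1934, "completions": 144},
}

# How much larger is the audience that saw "Fence"?
reach_advantage = ads["Fence"]["reach"] / ads["Harvest"]["reach"] - 1


def excess_page_hits(ad):
    """Survey page hits not accounted for by Facebook-reported clicks."""
    return ad["page_hits"] - ad["clicks"]


def completions_per_1000_reached(ad):
    """Completions normalized by audience size (an illustrative adjustment)."""
    return 1000 * ad["completions"] / ad["reach"]


print(f"Fence reach advantage: {reach_advantage:.0%}")
for name, ad in ads.items():
    print(f"{name:8s} excess page hits = {excess_page_hits(ad):5d}  "
          f"completions per 1000 reached = {completions_per_1000_reached(ad):.2f}")
```

A positive excess suggests traffic from outside the ad itself, such as shared links. A negative excess simply means some clicks never loaded the survey page.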

| Ad (A/B test group) | Cost | Impressions | Reach | Facebook Clicks | Page hits | Consents | Completions |
|---|---|---|---|---|---|---|---|
| Fuel (1) | 120.00 | 84888 | 52641 | 1102 | 1041 | 87 | 66 |
| Store (2) | 120.00 | 115120 | 50844 | 965 | 910 | 67 | 46 |
| Fence (2) | 240.00 | 208526 | 78509 | 1750 | 1502 | 156 | 100 |
| Vendor (3) | 240.00 | 229022 | 85706 | 1628 | 1364 | 110 | 64 |
| Harvest (1) | 240.00 | 181207 | 89921 | 2125 | 1669 | 119 | 78 |
| Mud (3) | 120.00 | 84952 | 55231 | 1374 | 1251 | 41 | 32 |

We ran a similar survey in Sikasso, Mali in November 2023. We varied the amount of money allocated to the ads, apportioning more money to the ads that had performed best in previous campaigns in Nigeria. In this campaign, some of the same patterns emerge as in the Zamfara campaign. “Mud” once again draws a lot of clicks (per unit cost) but has a dismal completion rate. And as before, the “Fence” ad has the most completions.

In this case, “Fence” is indisputably better than “Harvest,” as it has less reach as well as fewer clicks and page hits, but still more completions than “Harvest.” The real competition for the best ad, however, is between “Fuel” and “Fence.” What makes this competition tricky is that, while we spent twice as much on “Fence” as on “Fuel,” we got only 49% more reach and 59% more clicks. This tracks with another general observation we have made, which is that ads with smaller budgets have a better cost-per-click (or, put another way, that increasing ad budgets offer diminishing returns).
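The diminishing-returns arithmetic can be reproduced from the Sikasso table. The snippet below is a sketch with illustrative variable names. It uses the cost figures in the units given in the table.

```python
# Figures from the Sikasso table for the two ads being compared.
sikasso = {
    "Fuel":  {"cost": 120.00, "reach": 52641, "clicks": 1102},
    "Fence": {"cost": 240.00, "reach": 78509, "clicks": 1750},
}


def fence_to_fuel_ratio(metric):
    """Ratio of the 'Fence' value to the 'Fuel' value for one metric."""
    return sikasso["Fence"][metric] / sikasso["Fuel"][metric]


# Cost per click for each ad, in the same units as the table's Cost column.
cost_per_click = {ad: row["cost"] / row["clicks"] for ad, row in sikasso.items()}

print(f"Budget ratio:  {fence_to_fuel_ratio('cost'):.2f}x")
print(f"Extra reach:   {fence_to_fuel_ratio('reach') - 1:.0%}")
print(f"Extra clicks:  {fence_to_fuel_ratio('clicks') - 1:.0%}")
for ad, cpc in cost_per_click.items():
    print(f"{ad:6s} cost per click = {cpc:.3f}")
```

Doubling the budget without doubling reach or clicks shows up directly as a higher cost per click for the larger-budget ad.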

The next thing we can look at is the completion-to-clicks rate, defined as the ratio of the number of survey completions to the number of ad clicks. “Fuel” has a 6.0% completion rate and “Fence” has a 5.7% completion rate. At this point we ask: is this too close to call, or is it a significant difference? If we model each completion rate with a beta distribution, we can calculate the probability that the completion rate of “Fuel” is higher than that of “Fence” using this formula. Using this approach, there is a 62.8% chance that “Fuel” has a higher completion rate than “Fence,” which is not a significant difference. Once again, we cannot tell which is the best-performing ad!
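One way to sanity-check a comparison like this without a closed-form formula is Monte Carlo sampling from the two beta distributions. The sketch below makes several assumptions that are not stated in the post: a uniform Beta(1, 1) prior, a hypothetical helper named `prob_a_beats_b`, and arbitrary choices for the number of draws and the seed. It is an approximation, so its result will not match the figure above to the last digit.

```python
import random


def prob_a_beats_b(successes_a, trials_a, successes_b, trials_b,
                   prior=(1.0, 1.0), draws=100_000, seed=0):
    """Estimate P(rate_a > rate_b) by sampling from Beta posteriors.

    Each rate gets a Beta(prior_alpha + successes, prior_beta + failures)
    posterior; the uniform prior is an assumption made for illustration.
    """
    rng = random.Random(seed)
    alpha0, beta0 = prior
    params_a = (alpha0 + successes_a, beta0 + trials_a - successes_a)
    params_b = (alpha0 + successes_b, beta0 + trials_b - successes_b)
    # Count how often a sampled rate for A exceeds a sampled rate for B.
    wins = sum(
        rng.betavariate(*params_a) > rng.betavariate(*params_b)
        for _ in range(draws)
    )
    return wins / draws


# Sikasso table: "Fuel" had 66 completions from 1102 clicks,
# "Fence" had 100 completions from 1750 clicks.
p_fuel_beats_fence = prob_a_beats_b(66, 1102, 100, 1750)
print(f"Estimated P(Fuel rate > Fence rate): {p_fuel_beats_fence:.3f}")
```

A probability this close to a coin flip supports the same reading as above: the data do not separate the two ads.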

In conclusion, determining the best ad from Facebook data and survey completion data is not as straightforward as looking at which ad has the best cost-per-click or cost-per-completion. There may be exogenous factors, such as social sharing of survey links, as well as other factors introduced by Meta’s advertising system. Moreover, defining the ideal metric can be trickier than it initially seems. We hope that walking through this analysis inspires insights into your own data.

Acknowledgements

This work was supported by the Defense Advanced Research Projects Agency (DARPA) and the Army Research Laboratory under Contract No. [W911NF-21-C-0007] under the HABITUS program (https://www.darpa.mil/program/habitus). Any opinions, findings and conclusions or recommendations expressed in this material are those of the authors and do not necessarily reflect the views of the Defense Advanced Research Projects Agency (DARPA), the Department of Defense, or the United States Government.

Distribution Statement A. Approved for public release: distribution is unlimited.