Introduction

During my summer internship, I had the opportunity to work with the Data Science branch of the Advanced Analytics team. One of the team’s goals is to create a machine learning model that can provide meaningful information and insight into raw source code documents. Before I arrived, the team had already designed a model that could automatically give semantic labels to source code documents. This model was trained on source code taken from Stack Overflow in order to predict the associated tags for each Stack Overflow post. For example, when given an unlabeled source code document, the model can predict tags such as “python” or “database” that help describe the contents of the document.

My task was to start researching possible ways to achieve the project’s next goal: automatically dividing a source code document into logical chunks. To gain intuition as to why this might be important, imagine you wanted to search for code that completes task ‘X’ and you had access to a source code corpus or library. The current model would be able to return a relevant source code file (i.e., one in which task ‘X’ is completed), but you would have to search for what parts of the file are relevant. Having a program that could divide source code into logical chunks could make the process of obtaining relevant source code faster, smoother, and more sensible. This ‘intelligent’ parsing ability could then also serve as a basis for developing further insight into source code corpora.

Approaching the Problem

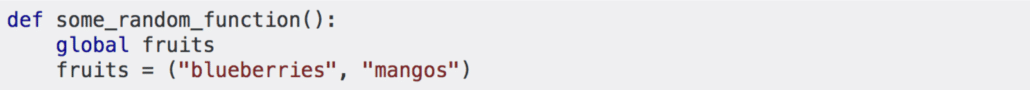

Due to the complexity of source code and the difficulty of obtaining ground truth data of code divided into logical chunks, I focused on first developing a solution for a simpler analogous problem. It was thought that being able to predict period locations within text would be an appropriate analogous task because periods represent the demarcation of logical linguistic structure. If an algorithm could learn how to group words into sentences, this process could then be easily transferred to dividing code into logical chunks. There are also many large, well-structured datasets in the field of natural language processing since it is a very popular subject area within the machine learning community. This means that the period prediction task would also allow us to start developing a model using good ground-truth data.

Google’s Billion Words

To develop a model for breaking text into logical chunks via period prediction, I utilized Google’s “Billion Words” corpus (which can be found here) because it provided a large dataset with clear ground-truth data. This dataset consists of many sentences (taken from various news sources) that I then concatenated together. Compared to general source code, the “Billion Words” corpus provides a narrower scope and more consistent logical block lengths. This is important because there exists less variation in the data, which is great for initial forays into model architecture research. Below is a sample of sentences taken from the Billion Words corpus and then concatenated together.

“The exceptional-quality standard doesn’t include testing for some potentially harmful metals and other chemicals that can appear in sludge, he said. What about her oversight of her state’s National Guard contingent? That’s a question that a lot of novelists ask themselves while writing. They are inclined to run a mile from this kind of issue, at least for now.”

The Data

Before we delve into the model architecture, let’s first look at how the training data was created from these concatenated sentences. To develop training data, a sequence length of 100 characters was slid across the corpus with a stride size of 100, so each training sample was an array of length 100 with a character at each location and the periods replaced with a whitespace. The inputs are fixed length because it allows the data to be trained on common machine learning architectures such as LSTM’s and CNN’s. Only periods that mark end of sentences were replaced (e.g. periods used in phrases like “U.S.” and “Mr.” were not predicted on). The label array is also of size 100 where a 1 indicates a period at the location and a 0 is present otherwise. Note: the example sentence below was not taken from the corpus, but rather created by me for demonstration purposes. Also, the sequence length below is 10 not 100 to aid readability.

Original Sequence

"i am good.you are bad.he did that.""

Sequence length = 10

Training sample #1

['i',' ','a','m',' ','g','o','o','d','.']

^

period

Label sample #1

[ 0 , 0 , 0 , 0 , 0 , 0 , 0 , 0 , 0 , 1 ]

Training sample #2

['y','o','u',' ','a','r','e',' ','b','a']

Label sample #2

[ 0 , 0 , 0 , 0 , 0 , 0 , 0 , 0 , 0 , 0 ]

Training sample #3

['d','.','h','e',' ','d','i','d',' ','t']

^

period

Label sample #3

[ 0 , 1 , 0 , 0 , 0 , 0 , 0 , 0 , 0 , 0 ]

Usage of LSTM

Because language comprehension lies at the core of this task, the model was predicated on the use of the Long Short Term Memory (LSTM) architecture. The LSTM is state-of-the-art when it comes to natural language processing and is able to learn long-term dependencies between time steps because it maintains what is referred to as its “cell state.” The cell state exists at each time step and is updated at each time step so that the network can keep important information relevant to that time step and dispose of irrelevant information. This ability to learn which information should be kept and forgotten is what makes the LSTM architecture great for language tasks. A further explanation of LSTM’s can be found here.

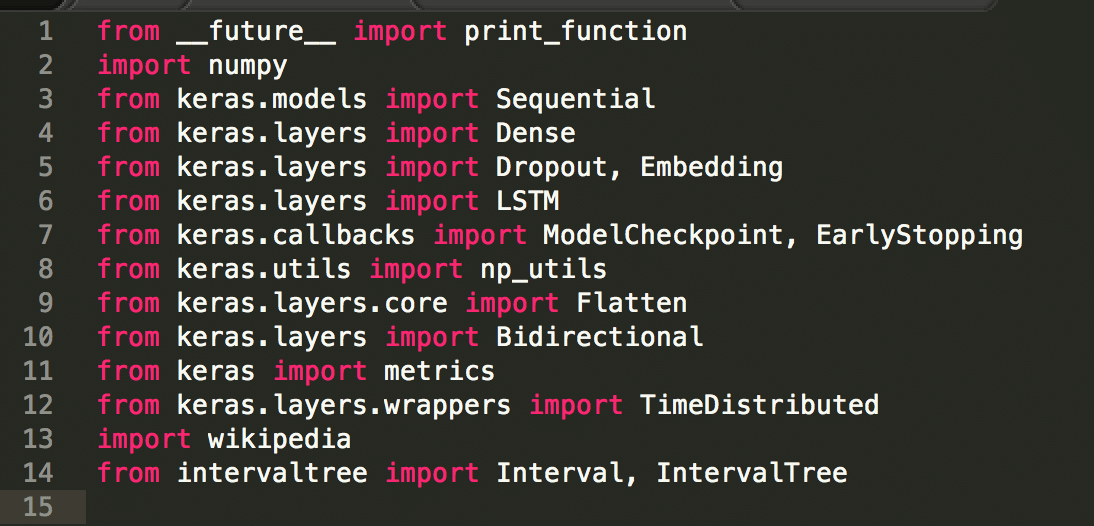

The Model

The first build of the model only included a single LSTM layer, and wasn’t able to offer much insight. Throughout the process of adding layers and making tweaks to the model, binary accuracy was used as the metric to numerically evaluate the model’s success. The binary accuracy metric looks at every character location and checks if the model correctly correctly the presence or absence of a period. Binary cross entropy was the loss function used to train the model. Below is the Keras implementation and description of the best performing architecture.

n_vocab = 256 num_lstm_units = 256 bimodel = Sequential() bimodel.add(Embedding(n_vocab,20, input_length =100)) bimodel.add(Dropout(.2)) bimodel.add(Bidirectional(LSTM(num_lstm_units, return_sequences=True))) bimodel.add(Dropout(.2)) bimodel.add(TimeDistributed(Dense(150, activation='relu'))) bimodel.add(Dropout(.2)) bimodel.add(TimeDistributed(Dense(75, activation = 'relu'))) bimodel.add(Dropout(.2)) bimodel.add(TimeDistributed(Dense(1, activation = 'sigmoid'))) bimodel.add(Flatten())

An embedding layer was used first, which optimized the character embeddings based on the goal of the model. Although word embeddings might be a better approach when dealing solely with period prediction, I elected not to use them because the end goal was to apply this model to source code. Because of different languages and user-created variable names, source code contains very large vocabularies, which would make word embeddings difficult to train. Thus, character embeddings were utilized, even though they might not be the best approach for this specific case.

A bidirectional LSTM layer follows these embeddings. The bidirectional component means that two LSTMs are trained, one that feeds the data forwards and one that feeds the data in backwards, with the results then concatenated together. As mentioned earlier, this layer is the core of this model because it is the part that learns how to “comprehend” the language. At a high level, a bidirectional LSTM allows for future information to be used in conjunction with past information (whereas a standard LSTM only considers past information). If a human were to try and determine the location of a missing period, they would most likely “look ahead” and use future information to piece together a prediction for the period’s location. For example, a human could recognize that the subject at the beginning of a phrase is different than the one at the end, and this could help them piece together the proper location for the period.

Next, the information gathered by the LSTM is condensed using two fully connected dense layers. These layers act as the “decision makers,” using the output information from the LSTM to decide which locations should have a period.

Finally, the output is flattened so that the input dimensions match the output dimensions, which allows the model to properly compile in Keras. Dropout was used between all the layers as a regularization technique to protect against overfitting on the training set.

Results

After training on approximately 30 million training samples (which took about 27 hours on one Nvidia 1080 GPU), the model was able to deliver some meaningful results. Its binary accuracy on the test set was about 99.68%. As a comparison metric, periods represent around 1% of characters, so if the model predicted no divisions between sentences, it would achieve a binary accuracy of 99%. Below is the precision recall curve for this model and some textual examples. The precision-recall metric was used because the nature of this problem requires that the bulk of locations do not contain a period. Precision-recall shows whether these rare cases are learned and predicted correctly.

Numerically the model showed some promising results, so next I wanted to see how well the model could perform on real text. Here’s a paragraph taken from the “Machine Learning” wikipedia page in which I removed the periods and had the model predict their locations.

Sample Paragraph:

Machine learning is closely related to (and often overlaps with) computational statistics, which also focuses on prediction-making through the use of computers. It has strong ties to mathematical optimization, which delivers methods, theory and application domains to the field. Machine learning is sometimes conflated with data mining, where the latter subfield focuses more on exploratory data analysis and is known as unsupervised learning. Machine learning can also be unsupervised and be used to learn and establish baseline behavioral profiles for various entities and then used to find meaningful anomalies. Now this is a completely different sentence

Model Prediction:

Machine learning is closely related to (and often overlaps with) computational statistics, which also focuses on prediction-making through the use of computers. It has strong ties to mathematical optimization, which delivers methods, theory and application domains to the field. Machine learning is sometimes conflated with data mining, where the latter subfield focuses more on exploratory data analysis and is known as unsupervised. Learning machine learning can also be unsupervised and be used to learn and establish baseline behavioral profiles for various entities and then used to find meaningful anomalies. Now this is a completely different sentence

It turns out the model did pretty well, only missing the third period location by one word. The argument could even be made that a human who did not have in-depth knowledge of machine learning might have misplaced that period, as well. Also, as you might have guessed, the last sentence was not part of the original paragraph. I added it to see if the model could predict the last period location, given sufficient context. When fed discrete-sized inputs, the model does have trouble predicting periods at the very end of inputs, presumably due to a lack of context.

As a way to gain deeper insight into the model’s thought process, I shaded the background of each character according to how confident the model was that a period belongs at that location. This allows us to see the other potential locations, as well as possible areas of confusion. Below are the results.

Model Mishaps

To gain more insight regarding the type of errors the model was making, I extracted some of the sentences that it missed. Here is a disconcerting example in which the model fails to recognize semantic norms of the English language. It did not recognize that ending the sentence at “restricts” is not a complete thought and does not make logical sense.

Ground Truth:

Ohio gov. ted strickland signed in june a law that restricts the annual percentage rate that lenders can charge to 28 percent, and limits the number of loans customers can take to four per year.

Model Prediction:

ohio gov. ted strickland signed in june a law that restricts.

the annual percentage rate that lenders can charge to 28 percent, and limits the number of loans customers can take to four per year.

On the other hand, here’s where the model should not be faulted for its mistake. Instead of combining both phrases into one sentence, the model chose to separate the phrase into two sentences that both make logical sense.

Ground Truth:

A college cornerback might play very well on tape, but that doesn’t mean a talent evaluator can be certain the cornerback will play very well in the nfl.

Model Prediction:

A college cornerback might play very well on tape, but that doesn’t mean a talent evaluator can be certain.

The cornerback will play very well in the nfl.

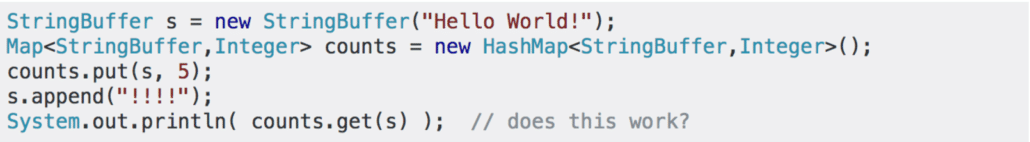

Extending the Model to Source Code

Learning to Discern Logical Code Blocks

Now that we have a working model that performs well on the analogous task of period prediction, the next step is to apply this model to our ultimate goal of recognizing logical segments in code. Before delving into the depths of the new problem, let’s first gain deeper understanding about what it means for a set of lines to be a logical code chunk. Below is an example taken from Stack Overflow. Notice how the two boxes of code do very different tasks. One defines a function and the other achieves some sort of printing task. These are two different logical code blocks, and are what the model should be able to distinguish. It is also important to note that while one of them is a function, the other one is not, so standard parsers would not have picked up on both logical blocks.

The Data

Code snippets taken from Stack Overflow posts were used as training data for this task (a diagram of an example post can be seen below). Once downloaded, they were concatenated together with a newline character and the model was then trained to predict which newline characters marked the division between two code snippets. Training samples and labels were generated in the same way as the period prediction task with a sequence length and stride size of 100 characters. The locations in the label samples that contained the delimiting newlines held a ‘1’ and all other locations held a ‘0’.

However, before the model could train, some issues with the data had to be addressed. The first issue with the Stack Overflow data is that it contains many languages and topics of discussion. To limit the complexity of the problem, I decided to only use Stack Overflow posts that contain the “python” tag. Since python is very close to pseudocode and hence the English language, it was thought that transferring the model to predict on this language would be a solid first step.

Within this subset, another problem emerged: lengths of each code sample were not evenly distributed. In this context, length refers to the number of lines a code snippet contains. The graph below shows this distribution of snippet length; more than half of all posts were just a single line. This could be a problem, because it could cause the model to take the distribution into account too heavily (and predict that each line is itself a logical chunk), which could later cause some overfitting problems. To avoid this, only samples with more than 3 lines were included in the training data.

Results

In total, 250 million characters were trained on, thus creating 2.5 million samples each with length 100 characters. On the test set, the model achieved binary accuracy of 99.96%. As a comparison, the frequency of delimiting newline character is 0.17%, so if the model predicted no divisions, it would have achieved a binary accuracy of 99.83%. Below is the precision-recall curve.

Here is an example of the model making correct divisions on the Stack Overflow data. Notice how the model was able to distinguish between the three segments. It was able to recognize that the line “edit_files = []” signified the start of a new logical code block that was accomplishing a different task as the code before it. Then when the model saw the “<names=noaction>” tag it recognized that this piece of code marked the start of yet another logically different task.

Segment divisions are demarcated with a line of dashes (—————–)

----------------------------------------------------------------------------------------------------

import operator

from django.db.models import Q

import django_filters

class MultiFieldFilter(django_filters.Filter):

def __init__(self,names,*args,**kwargs):

if len(args) == 0:

kwargs['name'] = names[0]

self.token_prefix = kwargs.pop('token_prefix','')

self.token_suffix = kwargs.pop('token_suffix','')

self.token_reducer = kwargs.pop('token_reducer',operator.and_)

self.names = names

django_filters.Filter.__init__(self,*args,**kwargs)

def filter(self,qs,value):

if value not in (None,''):

tokens = value.split(',')

return qs.filter(

reduce(

self.token_reducer,

[

reduce(

operator.or_,

[Q(**{

'%s__icontains'%name:

(self.token_prefix+token+self.token_suffix)})

for name in self.names])

for token in tokens]))

return qs

----------------------------------------------------------------------------------------------------

edit_files = []

with open('C:UsersrgriffinDesktopreplace.txt', 'r' )as f:

for line in f:

l = line.partition(',')[0]

e = l.replace('#(', '')

r = e.replace('U:', '//Stream/main/')

q= r.replace('', '/')

edit_files.append(q)

f.close

for i in edit_files:

p4.run("edit" , i)

----------------------------------------------------------------------------------------------------

<Names=NoAction>

iwona

karol

basia

joasia

monika

Now here is an example of where the model failed to recognize a division point. As you can see, the model should have divided the code at the start of the function declaration because this is the point in the code that begins to deal with a separate distinct task. The left side is the model’s prediction and the right side is the ground truth.

Left side is the predicted divisions and the right side is the ground truth.

Real Life Source Code

Remember that the end goal of this research was to find a model that works on actual source code corpora. So, to test the ultimate success and utility of this model, I ran it on source code that I wrote myself. In fact, the source code I tested on was actually the code used to generate the test itself. Below you can see the results and how the model chose to logically divide my code.

import requests

import numpy

import string

from keras.models import Sequential

from keras.layers import Dense

from keras.layers import Dropout, Embedding

from keras.layers import LSTM

from keras.layers.wrappers import TimeDistributed

from keras.callbacks import ModelCheckpoint, EarlyStopping

from keras.utils import np_utils

from keras.layers.core import Flatten,Reshape

from keras.layers import Bidirectional

from keras import metrics

f = open("source_code_test.py","r")

raw_code =f.read()

print raw_code

----------------------------------------------------------------------------------------------------

r = requests.get('https://raw.github.com/jamesob/tinychain/master/tinychain.py')

raw_code_ = r.text

printable_set = set(string.printable)

raw_code_ = ''.join([c for c in raw_code_ if c in printable_set])

#try reg exp or other method to remove newlines

raw_code = raw_code.replace('nn','n')

raw_code = raw_code.replace('nn','n')

#raw_code.replace('u26fc',' ')

# raw_code.decode("utf-8").replace(u"u26fc", "")

----------------------------------------------------------------------------------------------------

bimodel = Sequential()

bimodel.add(Embedding(256,20, input_length =None))

bimodel.add(Dropout(.2))

bimodel.add(Bidirectional(LSTM(256, return_sequences=True)))

bimodel.add(Dropout(.2))

bimodel.add(TimeDistributed(Dense(150, activation = 'relu')))

bimodel.add(Dropout(.2))

bimodel.add(TimeDistributed(Dense(75, activation = 'relu')))

bimodel.add(Dropout(.2))

bimodel.add(TimeDistributed(Dense(1, activation = 'sigmoid')))

----------------------------------------------------------------------------------------------------

filename = "/home/jdormuth/reversecrowd/trials/small-snippets/weights-improvement-09-0.0030.hdf5"

bimodel.load_weights(filename)

bimodel.compile(loss = 'binary_crossentropy', optimizer='adam')

pattern = [ord(char) for char in raw_code]

print raw_code

----------------------------------------------------------------------------------------------------

divisions = []

i = 0

[chr(value) for value in pattern]

x = numpy.reshape(pattern, (1,len(pattern))) prediction = bimodel.predict(x,verbose =3) for j in range(len(pattern)): if(prediction[0][j]> .3): divisions.append(i+j) —————————————————————————————————- def replace_str_index(text,index=0,replacement=’n—————————————————————————————————-n’): return ‘%s%s%s’%(text[:index],replacement,text[index+1:]) for x in range(len(divisions)-1,-1,-1): raw_code = replace_str_index(raw_code,divisions[x]) —————————————————————————————————- text_file = open(“source-code-test.txt”, “w”) text_file.write(raw_code) text_file.close() print r.text print len(divisions)

It turns out that the model was actually pretty successful at this source code discernment task. Before the first division, it grouped all the import statements together. Between the first and second divisions, it grouped the creation of training data and string operations. With the next two divisions, it was able to recognize the portion of code that defines and builds the model. Then it went on to recognize the loading of model weights and model compilation. Next it recognized the difference between a loop that makes the predictions on the source and the functions that put these division predictions into the code. Lastly, it saw that opening and writing to a file was the final task of this code. Although the model wasn’t “perfect” (e.g., it collected three extra lines with its import statement group), it was able to recognize and break apart the different tasks pretty well. To gain further insight into its decision process I shaded each newline corresponding to the model’s confidence that that newline was a logical breakpoint. Again, the darker the shade the more confident the model was that a newline should appear there.

Analysis and Further Exploration

One potential pitfall of the model could be that it searches for transition points rather looking for “bigger picture” content changes. For example, if it sees an import statement or class declaration, it might be relying on these occurrences to predict divisions rather than using contextual clues. A possible solution that could help the model gain “big picture” insight might be to incorporate word embeddings in lieu of the current character embeddings. It is important to note, however, that typical word embedding processes (such as Word2Vec) are infeasible for source code comprehension, due to the much higher vocabulary size than typical natural language processing tasks. In order to get around this issue, word embedding processes such as fastText and Charagram could be of use. These processes use character-level information to create word embeddings, which helps the model discern the meaning of unknown words.

It is also important to note that the source code experiments were done with only one language, Python. However, the end goal is to have an algorithm that is language-agnostic. Further exploration into incorporating multiple languages into the training data would definitely be necessary if this goal is to be achieved.

Acknowledgements

I would like to give special thanks to the members of my team (Ben Gelman, Bryan Hoyle, Jessica Moore, and David Slater) for assisting and offering insight on various tasks throughout this process. Lastly, another thanks to David Slater for supervising and guiding me through this research.