Apple’s recent move to an ARM architecture with the M1 chips in their new products has necessitated revisiting some of our Docker images to support the architecture in addition to the current Intel architecture. Our versioned builds are done using some kind of Continuous Integration (CI) platform such as CircleCI to build the container images and push them to DockerHub. While this post will focus on CircleCI, the lessons apply in general to other CI solutions as well.

The challenges in updating our images were many, starting with figuring out how to build such containers with Docker tools. A great many articles pointed to “buildx” as the easy solution: it lets you pass an argument listing every architecture you want to build your image for. The reality is that a separate build thread is spawned for every architecture you list. If the architecture matches the host running the build, that thread executes normally, while the other threads run through an emulator and are therefore slower. As it turns out, much, much slower.
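
For reference, the “easy” route boils down to a single command. The sketch below only prints that command rather than running it, so it is safe to try anywhere. Note that `buildx_command` is a hypothetical helper name and `myorg/myapp:1.2.3` is a placeholder image; neither comes from our project.

```shell
# Hypothetical helper: print (do not run) a buildx invocation that builds one
# image for several platforms and pushes the result.
#   $1 - image name and tag (placeholder value used below)
#   $2 - comma-separated platform list
buildx_command() {
  image="$1"
  platforms="$2"
  printf 'docker buildx build --platform %s -t %s --push .\n' "$platforms" "$image"
}

# Every platform in the list that differs from the build host runs under emulation.
buildx_command "myorg/myapp:1.2.3" "linux/amd64,linux/arm64"
```

On an Intel host, only the linux/amd64 half of that build runs natively, which is where the slowdown described above comes from.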

While Docker’s “buildx” tool makes building, tagging, and uploading multi-architecture images to DockerHub much simpler, that “easy” price became very costly when trying to re-create the process in CI workflows. CI workflow jobs have all manner of constraints to keep in mind: hardware resources available like machine types, CPU/GPU counts, RAM limitations, software installed on the available OSes, runtime costs, etc. They all also enforce some kind of timeouts for builds which can be adjusted based on your payment plan, but there’s always a maximum you cannot exceed. To illustrate the challenge faced by some of the more complex images, let me recount one specific use case for why I eventually abandoned use of the “buildx” tool.

The Current State of Affairs

The project I was reviewing to add M1 architecture images to was built from several Docker image layers. Each layer was built upon the previous one so that the code that rarely changed, like required OS libraries or programming language dependencies, could remain as an unchanged FROM layer during frequent code updates. This layering shrank build times during development considerably compared with waiting several hours for a total build from scratch, and that was before adding another architecture into the mix. The base layer would take a little over two hours to build the packages it needed for the OS and a set of geographic computation libraries. One of the middle layers could easily take 45 minutes to install all the JavaScript and Python dependencies and any additional OS libraries they required. The code layers would then only require a dozen or so minutes to build on top of these time-intensive layers. Developers could create multiple builds a day and were happy with the resulting speed improvements over a single-layer approach. For each build layer, a hash-based Docker image tag was checked for existence in DockerHub, and the image was built if the tag wasn’t found. The simple CI workflow was a single job to build every layer with a separate job to conditionally deploy the finished development image.
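
To make the hash-based tag idea concrete, here is a minimal sketch of how such a tag could be derived. It is not our project's actual helper: `layer_tag` is a hypothetical name, and it assumes GNU coreutils `md5sum` is available, as it is on the Ubuntu machine images.

```shell
# Hypothetical helper: derive a stable tag from the contents of the files that
# define a layer. Assumes GNU coreutils `md5sum` is on the PATH.
#   $1    - stage name used as the tag prefix (e.g. "base")
#   $2... - files whose contents determine the layer
layer_tag() {
  stage="$1"
  shift
  # Hash the concatenated contents; changing any one file changes the tag.
  hash=$(cat "$@" | md5sum | cut -d' ' -f1)
  printf '%s-%s\n' "$stage" "$hash"
}

# Usage sketch (left commented out because it needs real files and a registry):
#   tag=$(layer_tag pylibs docker/dfstage-pylibs.dockerfile poetry.lock)
#   docker manifest inspect "myorg/myapp:${tag}" > /dev/null 2>&1 || echo "build needed"
```

Because the tag depends only on file contents, an unchanged layer resolves to a tag that already exists in the registry and its build can be skipped.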

Challenges

Our CI solution would cancel workflows if they took more than 3 hours. Considering the base layer already took 2 hours to build, doubling that in order to create an additional M1 image would not work. The workflow would need to change so that each layer would be its own job, but that still would not work for the 2-hour build for a single layer and a single architecture. I lucked out in that while investigating ways to speed up the OS layer build, I discovered the packages that were being built from scratch actually had pre-built solutions for the Alpine OS version we were using. By using the pre-built libraries instead of building from source, what had once been a two hour build-from-scratch became a dozen-minute download and configure layer instead.

The build-time issue had been solved for the base OS layer, but the next hurdle was actually getting “buildx” to execute using CircleCI’s machine images. I developed a script to install the most recent version of “buildx”, plus additional scripts to install emulators for other architectures such as linux/arm64 for Apple’s M1s. Thanks go to the Docker documentation for spelling that out, as it was neither simple nor intuitive to accomplish. This process worked well enough for the base OS layer, but the middle layer, which installed all the Python dependencies, became an issue. Apparently, QEMU emulation is extremely slow when building and installing Python dependencies, and there were many posts like this one describing the problem. The time needed seemed to grow exponentially, and I wasted days trying to optimize for speed but ran out of ideas besides creating more dependency Docker layers. Splitting the library layer into who knows how many smaller layers just to get around this speed quicksand was not a solution I was looking forward to. It was at this point that I decided to re-check my assumptions in case I had overlooked something basic. Maybe it was time to look into “the harder way”, which had been the solution before “buildx” made things easy.

My scripts for getting Docker’s “buildx” to work on an Ubuntu machine image in CircleCI:

####################
# Check whether the multi-architecture Docker builder, buildx, is installed.
# Echoes "1" when the Docker apt keyring written by multiArch_installBuildx exists.
function multiArch_isBuildx()
{
  if [ -f "/usr/share/keyrings/docker-archive-keyring.gpg" ]; then
    echo "1"
  fi
}

####################
# Multi-architecture Docker images require some bleeding edge software, buildx.
# Use this function to install Docker `buildx`.
# @see https://docs.docker.com/engine/install/ubuntu/
# Used with machine image: ubuntu-2004:202111-02 (Ubuntu 20.04, revision: Nov 02, 2021)
function multiArch_installBuildx()
{
  # Uninstall older versions of Docker, ok if it reports none are installed.
  sudo apt-get remove docker docker-engine docker.io containerd runc;
  # install using repository
  sudo apt-get update && sudo apt-get install \
    ca-certificates \
    curl \
    gnupg \
    lsb-release;
  # add Docker's official GPG key
  curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg;
  echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu \
    $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null;
  # install docker engine
  sudo apt-get update && sudo apt-get install docker-ce docker-ce-cli containerd.io;
  # test engine installation
  sudo docker run hello-world;
}

####################
# Multi-architecture Docker images require some bleeding edge software, buildx.
# Use this function to install arm64 architecture.
# @see https://docs.docker.com/engine/install/ubuntu/
# Used with machine image: ubuntu-2004:202111-02 (Ubuntu 20.04, revision: Nov 02, 2021)
# @ENV USER - the circleci user that executes commands.
function multiArch_addArm64Arch()
{
  sudo apt-get update && sudo apt-get install \
    apt-transport-https \
    ca-certificates \
    curl \
    gnupg-agent \
    software-properties-common;
  sudo add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
  sudo apt-get update && sudo apt-get install docker-ce docker-ce-cli containerd.io;
  # so we don't have to use sudo
  sudo groupadd docker && sudo usermod -aG docker $USER && newgrp docker;
  # emulation deps
  sudo apt-get install binfmt-support qemu-user-static;
  # test engine installation again to show what arch it supports
  sudo docker run hello-world;
}

####################
# Multi-architecture Docker images require some bleeding edge software, buildx.
# Use this function to create and use a new builder context.
# @see https://docs.docker.com/engine/install/ubuntu/
# Used with machine image: ubuntu-2004:202111-02 (Ubuntu 20.04, revision: Nov 02, 2021)
# @param string $1 - (OPTIONAL) the name of the context to use (defaults to 'mabuilder').
function multiArch_createBuilderContext()
{
  DOCKER_CONTEXT=${1:-mabuilder};
  docker context create "${DOCKER_CONTEXT}";
  docker buildx create "${DOCKER_CONTEXT}" --use;
}

And a workflow job utilizing them:

build-example:
  machine:
    image: ubuntu-2004:202111-02
  steps:
    - checkout
    - run:
        name: Install Docker Buildx for Multi-Architectures
        command: |-
          source scm/utils.sh; multiArch_installBuildx
          source scm/utils.sh; multiArch_addArm64Arch
          source scm/utils.sh; multiArch_createBuilderContext
    - run:
        name: Build My Stuff
        command: <buildx command>

The Eventual Solution Employed

Our CI solution already supported workflows consisting of multiple jobs which could be executed in parallel where possible. Everything I’d been reading and watching online about multi-architecture builds claimed “buildx” was the easy way and that the “old way” was just too hard and therefore never discussed. I did not relish digging into how images were constructed prior to “buildx”, but I eventually found Jeremie Drouet’s post, and it completely changed my approach: the supposedly “harder” way turned out to be easy to accomplish in CircleCI.

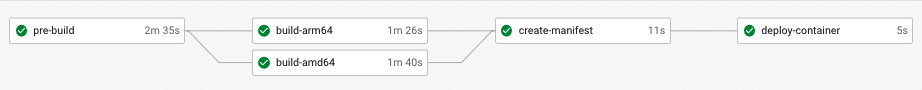

The CI workflow now employs three jobs: the first two run in parallel on different machine architectures, and the third creates the manifest needed to “merge” them into one DockerHub image. Basically, you create a job to build the Docker image as you normally would, but with an additional build argument supplied based on which host platform the CI job is using: --build-arg "ARCH=amd64/" for the traditional Intel build and --build-arg "ARCH=arm64/" for the M1 version. Since they are separate workflow jobs, they can run on different host machines. There is no speed issue because no emulation is involved. Once both jobs finish, the third job runs to create and push the manifest which links the two images as a “single Docker tagged image”. While this process is not considered “the easy way” for an individual developer lacking multiple available host machine architectures, it is by far the easiest means for CI solutions which have no such limitations.
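
Our jobs hard-code the architecture in the job definition, but the same value could also be derived from the host at run time. The sketch below is one way to do that, under the assumption that the runner reports `x86_64` or `aarch64` from `uname -m` (the usual values on Linux); `docker_arch` is a hypothetical helper name.

```shell
# Hypothetical helper: map a machine name (default: `uname -m`) to the
# architecture names used in the ARCH build argument.
docker_arch() {
  machine="${1:-$(uname -m)}"
  case "$machine" in
    x86_64|amd64)  echo "amd64" ;;
    aarch64|arm64) echo "arm64" ;;
    *) echo "unsupported architecture: $machine" >&2; return 1 ;;
  esac
}

# Show the build argument a native build on this host would receive.
if arch=$(docker_arch); then
  echo "--build-arg ARCH=${arch}/"
fi
```

Because each job runs on native hardware, the value always matches the machine doing the build, and no emulator needs to be installed.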

Without going into too much detail, the scripts I use in my CI solution only needed minor tweaks compared to what it took to get “buildx” to work:

####################
# Determine the image tag for Python Libs image based on its requirements file(s).
# Ensure the docker image tagged with the special tag exists; build if needed.
# @param string $1 - the image stage string to use, "pylibs" if empty.
function EnsurePyLibsImageExists()
{
  IMAGE_NAME="${DEFAULT_IMAGE_NAME}"
  IMG_STAGE=${1:-"pylibs"}
  DOCKERFILE2USE="docker/dfstage-${IMG_STAGE%-*}.dockerfile"
  IMAGE_TAG_HASH=$(CalcFileArgsMD5 "${DOCKERFILE2USE}" "$(GetImgStageFile "base")" "poetry.lock" "pyproject.toml" "package-lock.json" "package.json")
  IMAGE_TAG="${IMG_STAGE}-${IMAGE_TAG_HASH}"
  echo "${IMAGE_TAG}" > "${WORKSPACE}/info/${IMG_STAGE}_tag.txt"
  if ! DockerImageTagExists "${IMAGE_NAME}" "${IMAGE_TAG}"; then
    FROM_STAGE_TAG=$(GetImgStageTag "base")
    PrintPaddedTextRight " Using Base Tag" "${FROM_STAGE_TAG}" "${COLOR_MSG_INFO}"
    echo "Building Docker container ${IMAGE_NAME}:${IMAGE_TAG}…"
    buildImage "${IMAGE_NAME}" "${IMAGE_TAG}" "${DOCKERFILE2USE}" \
      --build-arg "FROM_STAGE=${IMAGE_NAME}:${FROM_STAGE_TAG}" \
      --build-arg "ARCH=${IMG_STAGE##*-}/"
    "${UTILS_PATH}/pr-comment.sh" "Python/NPM Libs Image built: ${IMAGE_NAME}:${IMAGE_TAG}"
  fi
  PrintPaddedTextRight "Using Python/NPM Libs Image Tag" "${IMAGE_TAG}" "${COLOR_MSG_INFO}"
}

####################
# Determine the image tag for Python Libs image based on its requirements file(s).
# Ensure the manifest combining the amd64 and arm64 images exists under that tag;
# create and push it if needed.
function EnsurePyLibsManifestExists()
{
  IMAGE_NAME="${DEFAULT_IMAGE_NAME}"
  IMG_STAGE="pylibs"
  DOCKERFILE2USE="docker/dfstage-${IMG_STAGE}.dockerfile"
  IMAGE_TAG_HASH=$(CalcFileArgsMD5 "${DOCKERFILE2USE}" "$(GetImgStageFile "base")" "poetry.lock" "pyproject.toml" "package-lock.json" "package.json")
  IMAGE_TAG="${IMG_STAGE}-${IMAGE_TAG_HASH}"
  echo "${IMAGE_TAG}" > "${WORKSPACE}/info/${IMG_STAGE}_tag.txt"
  if ! DockerImageTagExists "${IMAGE_NAME}" "${IMAGE_TAG}"; then
    # Tags written by the two architecture-specific build jobs.
    IMG1_TAG=$(cat "${WORKSPACE}/info/pylibs-amd64_tag.txt")
    IMG2_TAG=$(cat "${WORKSPACE}/info/pylibs-arm64_tag.txt")
    STAGE_HASH="${IMG1_TAG##*-}"
    IMG_NAME="${DEFAULT_IMAGE_NAME}"
    IMG_TAG="pylibs-${STAGE_HASH}"
    echo "${IMG_TAG}" > "${WORKSPACE}/info/pylibs_tag.txt"
    docker manifest create "${IMG_NAME}:${IMG_TAG}" \
      --amend "${IMG_NAME}:${IMG1_TAG}" \
      --amend "${IMG_NAME}:${IMG2_TAG}"
    docker login -u "${DOCKER_USER}" -p "${DOCKER_PASS}";
    docker manifest push "${IMG_NAME}:${IMG_TAG}"
  fi
  PrintPaddedTextRight "Using Python/NPM Libs Image Tag" "${IMAGE_TAG}" "${COLOR_MSG_INFO}"
}
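
Both functions lean on shell parameter expansion to split a stage string such as `pylibs-arm64` into its stage and architecture parts. The standalone sketch below shows what those two expansions return; `split_stage` is a hypothetical name used only for illustration.

```shell
# Hypothetical helper: show how the expansions used above split a stage string.
#   ${var%-*}   removes the shortest suffix matching "-*" (keeps the stage)
#   ${var##*-}  removes the longest prefix matching "*-"  (keeps the architecture)
split_stage() {
  img_stage="$1"
  printf 'stage=%s arch=%s\n' "${img_stage%-*}" "${img_stage##*-}"
}

split_stage "pylibs-arm64"   # prints: stage=pylibs arch=arm64
split_stage "pylibs"         # prints: stage=pylibs arch=pylibs
```

The second call shows a caveat: when the string has no hyphen, both expansions return the whole string, so the ARCH build argument would become `pylibs/`. In the workflow shown here, the build jobs always pass an explicit `-amd64` or `-arm64` suffix.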

The CircleCI configuration snippet with the three workflow jobs discussed here utilizes these functions. Note how similar the non-manifest jobs are: the only differences are the resource_class used (amd vs arm) and the image tag each creates. The manifest job then just combines these image tags into one image tag manifest, but it does not need to create an actual image with that tag, so it executes in mere seconds.

build-stage-pylibs-amd64:
  machine:
    image: ubuntu-2004:202111-02
  resource_class: medium
  steps:
    - checkout
    - attach_workspace:
        at: workspace
    - run:
        name: Ensure Pylibs-amd64 Image Exists
        command: |-
          source scm/utils.sh; EnsurePyLibsImageExists "pylibs-amd64";
    - persist_to_workspace:
        root: workspace
        paths:
          - info/pylibs-amd64_tag.txt

build-stage-pylibs-arm64:
  machine:
    image: ubuntu-2004:202111-02
  resource_class: arm.medium
  steps:
    - checkout
    - attach_workspace:
        at: workspace
    - run:
        name: Ensure Pylibs-arm64 Image Exists
        command: |-
          source scm/utils.sh; EnsurePyLibsImageExists "pylibs-arm64";
    - persist_to_workspace:
        root: workspace
        paths:
          - info/pylibs-arm64_tag.txt

build-stage-pylibs-manifest:
  machine:
    image: ubuntu-2004:202111-02
  steps:
    - checkout
    - attach_workspace:
        at: workspace
    - run:
        name: Ensure Pylibs Image Manifest Exists
        command: |-
          source scm/utils.sh; EnsurePyLibsManifestExists;
    - persist_to_workspace:
        root: workspace
        paths:
          - info/pylibs_tag.txt

While my discovery process with a sophisticated build is all well and good, a much simpler project applying the lessons learned will probably be easier to follow along with.

Simple Case, Step by Step

Consider a typical simple CI workflow containing a single job with multiple steps. These steps test, build, and deploy a Docker image.

version: 2
jobs:
  build:
    docker:
      - image: cimg/base:stable
    steps:
      - checkout
      - setup_remote_docker:
          version: 20.10.7
      - run:
          name: "Run go tests"
          command: |-
            docker build -t ci_test -f Dockerfile.test . && docker run ci_test
      - run:
          name: "Build and push Docker image"
          command: |-
            IMAGE_NAME=${CIRCLE_PROJECT_USERNAME}/${CIRCLE_PROJECT_REPONAME}
            BRANCH=${CIRCLE_BRANCH#*/}
            if [[ ! -z $CIRCLE_TAG ]]; then
              VERSION_TAG="${CIRCLE_TAG#*v}"
            else
              VERSION_TAG="ci-`cat VERSION.txt`-dev-${BRANCH}"
            fi
            docker build --build-arg VERSION=${VERSION_TAG} \
              -t $IMAGE_NAME:$VERSION_TAG -f Dockerfile .
            docker login -u "${DOCKER_USER}" -p "${DOCKER_PASS}"
            docker push $IMAGE_NAME:$VERSION_TAG
      - run:
          name: Deploy
          command: |-
            BRANCH=${CIRCLE_BRANCH#*/}
            if [[ $BRANCH == develop ]]; then
              <!-- deploy steps... -->
            else
              echo "Skipping deploy step"
            fi
workflows:
  version: 2
  application:
    jobs:
      - build:
          context: globalconfig
          filters:
            tags:
              ignore:
                - /^test-.*/

We need to break up the various steps into their own jobs in the workflow in order to best utilize parallel architecture build jobs. First, let us refactor the “deploy” step into its own workflow job to get a feel for how that’s done. Notice how we need to duplicate how we figure out the VERSION_TAG in both the “build” and “deploy” jobs as ENV vars will not be shared between jobs. We will address that in the next step.

version: 2
jobs:
  build:
    docker:
      - image: cimg/base:stable
    steps:
      - checkout
      - setup_remote_docker:
          version: 20.10.7
      - run:
          name: "Run go tests"
          command: |-
            docker build -t ci_test -f Dockerfile.test . && docker run ci_test
      - run:
          name: "Build and push Docker image"
          command: |-
            IMAGE_NAME=${CIRCLE_PROJECT_USERNAME}/${CIRCLE_PROJECT_REPONAME}
            BRANCH=${CIRCLE_BRANCH#*/}
            if [[ ! -z $CIRCLE_TAG ]]; then
              VERSION_TAG="${CIRCLE_TAG#*v}"
            else
              VERSION_TAG="ci-`cat VERSION.txt`-dev-${BRANCH}"
            fi
            docker build --build-arg VERSION=${VERSION_TAG} \
              -t $IMAGE_NAME:$VERSION_TAG -f Dockerfile .
            docker login -u "${DOCKER_USER}" -p "${DOCKER_PASS}"
            docker push $IMAGE_NAME:$VERSION_TAG
  deploy-container:
    docker:
      - image: cimg/base:stable
    steps:
      - checkout
      - run:
          name: "Deploy Container"
          command: |-
            IMAGE_NAME=${CIRCLE_PROJECT_USERNAME}/${CIRCLE_PROJECT_REPONAME}
            BRANCH=${CIRCLE_BRANCH#*/}
            if [[ ! -z $CIRCLE_TAG ]]; then
              VERSION_TAG="${CIRCLE_TAG#*v}"
            else
              VERSION_TAG="ci-`cat VERSION.txt`-dev-${BRANCH}"
            fi
            <!-- deploy steps... -->
workflows:
  version: 2
  application:
    jobs:
      - build:
          context: globalconfig
          filters:
            tags:
              ignore:
                - /^test-.*/
      - deploy-container:
          context: globalconfig
          requires:
            - build
          filters:
            branches:
              only:
                - /^develop$/
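
The tag logic duplicated in the two jobs above can be exercised locally before it is moved around. The following is a minimal sketch of that logic as a function; `compute_version_tag` is a hypothetical name, and the arguments stand in for the CircleCI-provided CIRCLE_TAG and CIRCLE_BRANCH variables and the VERSION.txt file.

```shell
# Hypothetical helper mirroring the VERSION_TAG logic in the config above.
#   $1 - git tag (stand-in for CIRCLE_TAG; empty for branch builds)
#   $2 - branch  (stand-in for CIRCLE_BRANCH)
#   $3 - path to the version file (VERSION.txt in the config)
compute_version_tag() {
  git_tag="$1"
  branch="${2#*/}"      # drop everything up to the first "/", e.g. feature/login -> login
  version_file="$3"
  if [ -n "$git_tag" ]; then
    printf '%s\n' "${git_tag#*v}"   # drop everything up to the first "v", e.g. v1.2.3 -> 1.2.3
  else
    printf 'ci-%s-dev-%s\n' "$(cat "$version_file")" "$branch"
  fi
}

# A tagged build never reads the version file.
compute_version_tag "v1.2.3" "" VERSION.txt   # prints: 1.2.3
```

For a branch build on `feature/login` with a version file containing `1.4.0`, the same function would yield `ci-1.4.0-dev-login`.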

Now that we have factored out the deploy step into its own job, let us refactor all the “pre-build” steps (including the test run) into a separate job. You could also make the test step its own job, but we will leave it as part of “pre-build” for simplicity’s sake. In order to determine the VERSION_TAG only once, and to save time by avoiding the need to check out code in every job, we will use CircleCI’s workspace mechanism: the pre-build job does all that work and persists it to the workspace, and subsequent jobs attach the workspace.

version: 2
jobs:
  pre-build:
    docker:
      - image: cimg/base:stable
    steps:
      - checkout
      - setup_remote_docker:
          version: 20.10.7
      - run:
          name: "Run go tests"
          #no_output_timeout: 180m (default is 10m, use this to adjust)
          command: |-
            docker build -t ci_test -f Dockerfile.test . && docker run ci_test
      - run:
          name: "Determine Tag To Use"
          command: |-
            BRANCH=${CIRCLE_BRANCH#*/}
            if [[ ! -z $CIRCLE_TAG ]]; then
              VERSION_TAG="${CIRCLE_TAG#*v}"
            else
              VERSION_TAG="ci-`cat VERSION.txt`-dev-${BRANCH}"
            fi
            echo ${VERSION_TAG} > version_tag.txt
      - persist_to_workspace:
          root: .
          paths: .
  build:
    docker:
      - image: cimg/base:stable
    steps:
      - attach_workspace:
          at: .
      - setup_remote_docker:
          version: 20.10.7
      - run:
          name: "Build and push Docker image"
          command: |-
            IMAGE_NAME=${CIRCLE_PROJECT_USERNAME}/${CIRCLE_PROJECT_REPONAME}
            VERSION_TAG=`cat version_tag.txt`
            docker build --build-arg VERSION=${VERSION_TAG} \
              -t $IMAGE_NAME:$VERSION_TAG -f Dockerfile .
            docker login -u "${DOCKER_USER}" -p "${DOCKER_PASS}"
            docker push $IMAGE_NAME:$VERSION_TAG
  deploy-container:
    docker:
      - image: cimg/base:stable
    steps:
      - attach_workspace:
          at: .
      - run:
          name: "Deploy Container"
          command: |-
            IMAGE_NAME=${CIRCLE_PROJECT_USERNAME}/${CIRCLE_PROJECT_REPONAME}
            VERSION_TAG=`cat version_tag.txt`
            <!-- deploy steps... -->
workflows:
  version: 2
  application:
    jobs:
      - pre-build:
          context: globalconfig
          filters: &build-filters
            tags:
              ignore:
                - /^test-.*/
      - build:
          context: globalconfig
          # build attaches the workspace persisted by pre-build, so it must wait for it
          requires:
            - pre-build
          filters: *build-filters
      - deploy-container:
          context: globalconfig
          requires:
            - build
          filters:
            branches:
              only:
                - /^develop$/

Once we have this workflow executing successfully, the final refactor is to break up the build job into three separate jobs, two of which run in parallel. The amd64 and arm64 build jobs both require the “pre-build” job, which means a test failure will not create any image. Once the “pre-build” job finishes successfully, both build jobs execute simultaneously on different hardware: one for Intel and one for M1 architecture. The four differences from the previous “build” job will be:

- the appending of the architecture type to the VERSION_TAG
- the docker build argument --build-arg "ARCH=${VERSION_TAG##*-}/" added to the build
- the CircleCI machine image
- the resource class to use

Everything else about the build step stays the same. Please note that the ARCH build argument is not strictly necessary and, when the Dockerfile does not declare it, results in a “[Warning] One or more build-args [ARCH] were not consumed” warning. It is still good practice to include the build argument so that if you ever need to alter the Dockerfile because of the architecture being built, it’s already being passed in. If the new architecture needs a library that the amd64 one did not, you can easily add some code to check the ARCH argument.

ARG ARCH
RUN if [ "${ARCH%/*}" = "arm64" ]; then \
      apt-get update && apt-get install -y librdkafka1 librdkafka-dev ; \
    else \
      echo "skipping librdkafka install, not needed for ${ARCH%/*}" ; \
    fi

The third job will create the manifest tying both of the architecture builds together as a “single image” accessed with the original image tag and is dependent on both images being created successfully. We also modify the deploy job to be dependent on this new manifest job instead of the old “build” job we removed.

version: 2
jobs:
  pre-build:
    docker:
      - image: cimg/base:stable
    steps:
      - checkout
      - setup_remote_docker:
          version: 20.10.7
      - run:
          name: "Run go tests"
          #no_output_timeout: 180m (default is 10m, use this to adjust)
          command: |-
            docker build -t ci_test -f Dockerfile.test . && docker run ci_test
      - run:
          name: "Determine Tag To Use"
          command: |-
            BRANCH=${CIRCLE_BRANCH#*/}
            if [[ ! -z $CIRCLE_TAG ]]; then
              VERSION_TAG="${CIRCLE_TAG#*v}"
            else
              VERSION_TAG="ci-`cat VERSION.txt`-dev-${BRANCH}"
            fi
            echo ${VERSION_TAG} > version_tag.txt
      - persist_to_workspace:
          root: .
          paths: .
  build-amd64:
    machine:
      image: ubuntu-2004:202111-02
    resource_class: medium
    steps:
      - attach_workspace:
          at: .
      - run:
          name: "Build and push Docker image (amd64)"
          command: |-
            IMAGE_NAME=${CIRCLE_PROJECT_USERNAME}/${CIRCLE_PROJECT_REPONAME}
            VERSION_TAG=`cat version_tag.txt`-amd64
            docker build --build-arg VERSION=${VERSION_TAG} \
              --build-arg "ARCH=${VERSION_TAG##*-}/" \
              -t $IMAGE_NAME:$VERSION_TAG -f Dockerfile .
            docker login -u "${DOCKER_USER}" -p "${DOCKER_PASS}"
            docker push $IMAGE_NAME:$VERSION_TAG
  build-arm64:
    machine:
      image: ubuntu-2004:202111-02
    resource_class: arm.medium
    steps:
      - attach_workspace:
          at: .
      - run:
          name: "Build and push Docker image (arm64)"
          command: |-
            IMAGE_NAME=${CIRCLE_PROJECT_USERNAME}/${CIRCLE_PROJECT_REPONAME}
            VERSION_TAG=`cat version_tag.txt`-arm64
            docker build --build-arg VERSION=${VERSION_TAG} \
              --build-arg "ARCH=${VERSION_TAG##*-}/" \
              -t $IMAGE_NAME:$VERSION_TAG -f Dockerfile .
            docker login -u "${DOCKER_USER}" -p "${DOCKER_PASS}"
            docker push $IMAGE_NAME:$VERSION_TAG
  create-manifest:
    docker:
      - image: cimg/base:stable
    steps:
      - attach_workspace:
          at: .
      - setup_remote_docker:
          version: 20.10.7
      - run:
          name: "Create and Push Docker Manifest"
          command: |-
            IMAGE_NAME=${CIRCLE_PROJECT_USERNAME}/${CIRCLE_PROJECT_REPONAME}
            VERSION_TAG=`cat version_tag.txt`
            docker manifest create "${IMAGE_NAME}:${VERSION_TAG}" \
              --amend "${IMAGE_NAME}:${VERSION_TAG}-amd64" \
              --amend "${IMAGE_NAME}:${VERSION_TAG}-arm64"
            docker login -u "${DOCKER_USER}" -p "${DOCKER_PASS}"
            docker manifest push "${IMAGE_NAME}:${VERSION_TAG}"
  deploy-container:
    docker:
      - image: cimg/base:stable
    steps:
      - attach_workspace:
          at: .
      - run:
          name: "Deploy Container"
          command: |-
            IMAGE_NAME=${CIRCLE_PROJECT_USERNAME}/${CIRCLE_PROJECT_REPONAME}
            VERSION_TAG=`cat version_tag.txt`
            <!-- deploy steps... -->
workflows:
  version: 2
  application:
    jobs:
      - pre-build:
          context: globalconfig
          filters: &build-filters
            tags:
              ignore:
                - /^test-.*/
      - build-amd64:
          context: globalconfig
          requires:
            - pre-build
          filters: *build-filters
      - build-arm64:
          context: globalconfig
          requires:
            - pre-build
          filters: *build-filters
      - create-manifest:
          context: globalconfig
          requires:
            - build-amd64
            - build-arm64
          filters: *build-filters
      - deploy-container:
          context: globalconfig
          requires:
            - create-manifest
          filters:
            branches:
              only:
                - /^develop$/
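
The manifest step follows a regular pattern, so it generalizes if more architectures are ever added. The sketch below only prints the `docker manifest create` command for a list of architecture suffixes rather than running it; `manifest_create_command` is a hypothetical helper name and `myorg/myapp:1.2.3` is a placeholder image.

```shell
# Hypothetical helper: print (do not run) the manifest command that combines
# per-architecture tags of the form <image>-<arch> under the plain image tag.
#   $1    - image name and tag
#   $2... - architecture suffixes, one per image pushed by the build jobs
manifest_create_command() {
  image="$1"
  shift
  cmd="docker manifest create ${image}"
  for arch in "$@"; do
    cmd="${cmd} --amend ${image}-${arch}"
  done
  printf '%s\n' "$cmd"
}

manifest_create_command "myorg/myapp:1.2.3" amd64 arm64
```

After the real manifest has been pushed, `docker manifest inspect` on the combined tag is a quick way to confirm that both platforms are listed.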

Once you have refactored the workflow, and assuming your new M1 job finishes successfully, your new images will now support both Intel and M1 architectures while not taking any more time than they would have before the refactor.